OpenClaw: AI Agent That Ships Code While You Sleep (2026)

Bradley Herman

When PostgreSQL can't handle your analytics workload, you need a specialized database. Your application processes millions of user events daily, dashboard queries timeout, and users notice sluggish performance. Traditional row-based databases excel at transactional workloads: retrieving individual user records or processing orders, but struggle when aggregating millions of rows for analytical queries.

OLAP (Online Analytical Processing) databases solve this problem. Unlike OLTP systems that optimize for individual record operations, OLAP databases organize data for analytical workloads: calculating averages across millions of transactions, generating reports from historical data, or powering real-time dashboards.

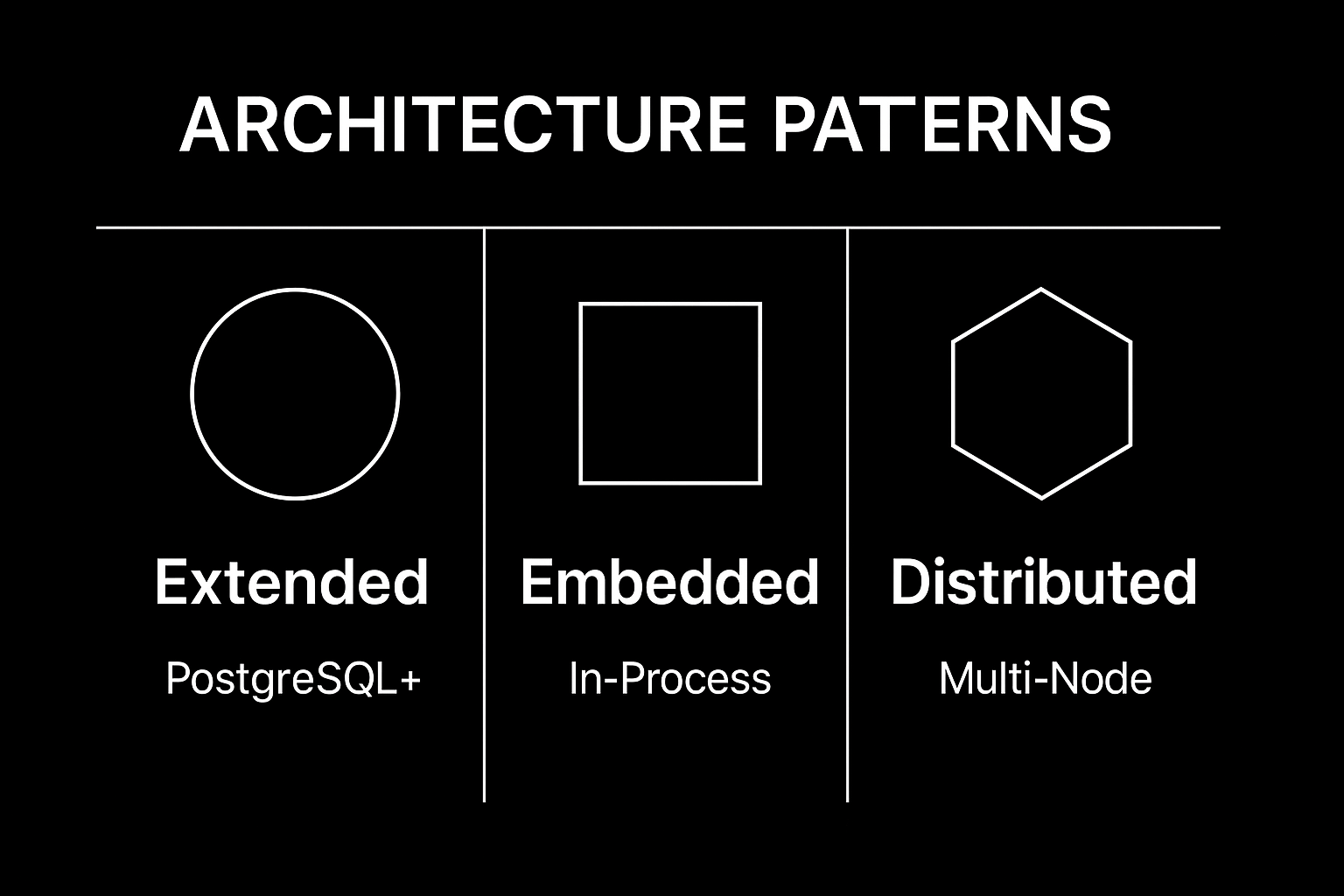

The OLAP landscape offers diverse solutions for different problems. Some databases embed directly within your application process, others require distributed clusters, and still others extend familiar tools with specialized optimizations. Not all OLAP databases solve the same problems.

Understanding the architectural trade-offs between embedded, extended, and distributed approaches is critical for making informed decisions. The "best" analytics database depends on your specific requirements: dataset size, query complexity, operational expertise, and infrastructure constraints. Many successful teams use multiple OLAP solutions for different purposes rather than seeking a universal answer.

This comparison examines three different approaches to analytical databases: TimescaleDB (PostgreSQL extended for time-series), DuckDB (embedded analytical processing), and ClickHouse (distributed columnar analytics). Each represents a distinct philosophy for solving analytical challenges, with clear strengths and limitations that align with specific use cases and team capabilities.

We're examining three analytical databases that solve overlapping but distinct problems in modern data infrastructure:

TimescaleDB transforms PostgreSQL into a time-series optimized database through automatic partitioning and hybrid storage. It maintains full PostgreSQL compatibility while adding time-series functions and compression capabilities.

DuckDB operates as an embedded analytical database that runs directly within your application process, similar to SQLite but optimized for analytical workloads. It excels at querying files directly from cloud storage without traditional ETL processes.

ClickHouse provides distributed columnar storage designed for real-time analytics at massive scale. It sacrifices transactional capabilities for analytical performance across petabyte datasets.

Our evaluation criteria focus on practical engineering decisions:

These criteria reflect real-world trade-offs that technical teams encounter when evaluating analytical infrastructure. Performance alone doesn't determine the right choice: operational complexity, team expertise, and integration patterns often matter more than raw query speed.

Different Architectures

Different Architectures

Quick verdict: "Best for teams already using PostgreSQL who need time-series performance"

TimescaleDB represents a pragmatic approach to analytical databases: extending proven PostgreSQL architecture with time-series optimizations rather than building new systems. This strategy eliminates the learning curve and operational overhead of adopting unfamiliar database technology while delivering measurable performance improvements.

Architecture and Core Innovation

TimescaleDB operates as a native PostgreSQL extension that integrates deeply with PostgreSQL's query planner and storage systems. The core innovation is hypertables: virtual tables that present a unified SQL interface while automatically partitioning data into time-based "chunks" behind the scenes.

Each chunk represents a time range (typically 7 days) and operates as a standard PostgreSQL table with its own indexes and optimization strategies. This architecture enables TimescaleDB to skip irrelevant chunks during queries, dramatically reducing I/O requirements for time-based filtering. When querying the last 24 hours from a billion-row dataset, TimescaleDB only scans chunks containing recent data rather than the entire table.

The hybrid storage system, called Hypercore, automatically transitions older chunks from row-based to columnar storage through configurable policies. This provides up to 90% storage compression on historical data while maintaining transaction performance on recent data.

Key Features and Capabilities

TimescaleDB's automatic partitioning works transparently through simple SQL commands. Converting a standard PostgreSQL table to a hypertable requires a single function call:

CREATE TABLE sensor_readings (

time TIMESTAMPTZ NOT NULL,

sensor_id INTEGER,

temperature DOUBLE PRECISION,

humidity DOUBLE PRECISION

);

SELECT create_hypertable('sensor_readings', 'time');TimescaleDB delivers several key capabilities:

These features transform PostgreSQL into a time-series powerhouse while preserving full SQL compatibility.

Continuous aggregates materialize aggregations incrementally and combine cached results with live data. These materialized views automatically refresh as new data arrives, providing sub-second dashboard updates on billion-row datasets.

Performance Characteristics

Production benchmarks demonstrate TimescaleDB's practical benefits. The system delivers 1,107,070 metrics per second (110,707 rows per second) during bulk loading operations with eight concurrent workers. Complex analytical queries show median latency of 757ms for aggregations across large datasets, representing 1,000× performance improvements over vanilla PostgreSQL through intelligent chunk exclusion.

Azure study of oil and gas monitoring systems shows TimescaleDB maintaining consistent insert performance over time, while vanilla PostgreSQL experienced 60-70% throughput degradation under continuous load.

Advantages and Strengths

The primary advantage lies in PostgreSQL compatibility. Teams with existing PostgreSQL expertise face no learning curve: all familiar SQL operations, administrative tools, and operational procedures continue working unchanged. Full ACID compliance enables mixing transactional and analytical workloads within the same database, supporting applications that combine real-time data ingestion with complex analytical requirements.

The mature PostgreSQL ecosystem provides proven solutions for monitoring, backup, replication, and high availability. Rather than learning operational procedures, teams leverage existing PostgreSQL knowledge and infrastructure.

Limitations and Considerations

TimescaleDB deprecated clustering capabilities in version 2.14, limiting write scaling to single-node PostgreSQL performance. While read replicas enable analytical scaling, write-heavy workloads requiring horizontal scaling may need alternative architectures.

Operational complexity inherits PostgreSQL's requirements including vacuum management, connection pooling, and replication configuration. Optimal performance depends on understanding TimescaleDB metrics like chunk exclusion rates and continuous aggregate refresh patterns alongside standard PostgreSQL monitoring.

The dual licensing model uses Apache 2.0 for core functionality and Timescale License (TSL) for advanced features like compression. TSL terms prohibit offering TimescaleDB as a managed service without commercial licensing, affecting service provider deployments.

Ideal Use Cases

TimescaleDB excels for IoT sensor networks requiring both high-volume data ingestion and complex analytical capabilities. Financial trading systems leverage its ability to handle real-time market data while supporting analytical queries across historical datasets.

Manufacturing analytics scenarios demonstrate TimescaleDB's strength in combining time-series sensor data with relational information about equipment, maintenance schedules, and operational contexts. These mixed workloads benefit from PostgreSQL's full SQL capabilities while gaining time-series performance optimizations.

Quick verdict: "Best for embedded analytics and ETL pipelines without external dependencies"

DuckDB solves a problem in the analytical database landscape: providing fast columnar processing directly within applications without managing separate database servers. This embedded approach eliminates network overhead, simplifies deployment, and enables analytical capabilities in environments where traditional database infrastructure isn't practical.

Architecture and Embedded Design

DuckDB runs in-process within your application, similar to SQLite but optimized for analytical workloads rather than transactions. The embedded architecture means zero-configuration deployment: no database servers to manage, no connection pooling to configure, and no network protocols to secure.

The system combines columnar storage with vectorized execution, processing data in fixed-size batches (typically 1024-2048 items) that fit within CPU L1 cache. This approach maximizes instruction throughput through SIMD operations while minimizing memory access latency. When calculating averages across millions of rows, DuckDB can perform multiple operations simultaneously rather than processing values individually.

Columnar storage provides advantages for analytical queries. Only required columns are read during query execution, and similar data types compress more efficiently than mixed row data. Zone map acceleration enables entire row groups to be skipped during filtering operations without scanning them.

Key Features and Zero-Copy Processing

DuckDB's most compelling feature for application developers is zero-copy file processing: querying CSV, Parquet, and JSON files directly from cloud storage without importing them into the database first. This capability transforms ETL workflows from complex data movement operations into simple SQL queries:

-- Query CSV files directly from S3

SELECT AVG(sales_amount) FROM read_csv_auto('s3://bucket/sales_data.csv');

-- Process multiple Parquet files

SELECT date, SUM(revenue) FROM read_parquet('gs://analytics/revenue/*.parquet')

GROUP BY date ORDER BY date;

-- Combine formats in single query

SELECT c.customer_name, SUM(s.amount)

FROM read_csv_auto('customers.csv') c

JOIN read_parquet('sales.parquet') s ON c.id = s.customer_id

GROUP BY c.customer_name;DuckDB provides several key capabilities:

This combination transforms data processing workflows by removing infrastructure requirements while delivering analytical performance.

The extension system adds capabilities without bloating the core database. Extensions for spatial data, full-text search, and cloud storage authentication can be installed as needed.

Performance and Resource Efficiency

DuckDB demonstrates 3-5× faster performance than PostgreSQL for analytical queries on single-node workloads. The vectorized execution engine automatically parallelizes operations across all available CPU cores without requiring configuration.

Compression ratios of 3:1 or better are common with real-world datasets, reducing both storage requirements and I/O costs. The system can process datasets up to several hundred gigabytes on typical hardware configurations, with query response times measured in seconds rather than minutes.

Benchmark tests comparing DuckDB, SQLite, and Pandas on 1 million row datasets showed DuckDB consistently achieving the fastest execution times for GROUP BY operations and complex aggregations.

Advantages and Deployment Simplicity

The embedded architecture eliminates infrastructure complexity. Applications can include analytical capabilities by adding DuckDB as a dependency, similar to including any other library. This approach works well for SaaS applications that need embedded analytics, dashboard APIs, or data transformation capabilities.

Integration with modern data science tools is seamless. DuckDB provides native Arrow compatibility for zero-copy data exchange, Pandas integration for existing Python workflows, and direct connectivity to popular visualization tools.

Limitations and Production Challenges

Memory management represents DuckDB's most operational challenge. Out-of-memory errors occur in production environments with complex queries or large datasets. The system lacks memory management features found in enterprise databases, requiring careful query design and memory limits.

The single-node architecture prevents horizontal scaling and creates limitations for large datasets. Concurrency constraints allow multiple readers but only single-writer operations, limiting its applicability for high-concurrency analytical workloads.

Production stability concerns include documented memory corruption issues and connection initialization slowdowns with very large databases (15TB+). These issues require application-level monitoring and automatic restart capabilities to maintain service reliability.

Optimal Applications and Use Cases

DuckDB excels for embedded analytics in SaaS applications where users need analytical insights without complex infrastructure. Dashboard APIs that process user data for real-time reports benefit from DuckDB's ability to query files directly and return results quickly.

ETL pipeline replacement represents another strong use case. Instead of writing Python or Node.js code to parse and transform CSV files, developers can process them directly with SQL queries, reducing code complexity while improving performance.

Data science workflows benefit from DuckDB's ability to query files during development and testing phases. Analysts can explore large datasets with familiar SQL syntax without importing data into traditional databases or configuring complex infrastructure.

Quick verdict: "Best for real-time analytics at scale with high-concurrency requirements"

ClickHouse represents the high-performance extreme of analytical database architecture, trading operational complexity for query speed and cost efficiency. Born from Yandex's need to process billions of web analytics events in real-time, ClickHouse optimizes every aspect of its design for analytical workloads at scale.

Architecture and Distributed Design

ClickHouse employs a shared-nothing distributed architecture where each node operates independently with local storage and compute resources. This enables massive parallel processing (MPP) where complex queries are broken down and executed across multiple nodes simultaneously.

The columnar storage engine processes data in blocks of 65,536 rows, utilizing CPU SIMD instructions for parallel operations. When aggregating revenue across millions of transactions, ClickHouse can perform multiple calculations simultaneously rather than processing values sequentially.

The MergeTree engine family provides the foundation for ClickHouse's performance through variants optimized for different use cases. Standard MergeTree offers basic columnar storage with sorting and partitioning, while ReplicatedMergeTree adds multi-replica consistency for high availability, and SummingMergeTree pre-aggregates numerical columns during background merge operations.

Performance Characteristics and Scalability

ClickHouse delivers performance metrics that define its position as one of the top choices for OLAP DBs. TPC-H results show 8,500 QphH@1TB on AWS c6a.metal instances (96 vCPU, 192 GiB RAM), with full-table scan rates reaching ~120 GB/s on optimized hardware configurations.

Sub-second response times on billion-row datasets make real-time user-facing analytics feasible at scale. Complex analytical queries that would take minutes in traditional databases complete in milliseconds, enabling interactive dashboards and real-time business intelligence applications.

The system handles thousands of concurrent analytical queries while maintaining consistent performance, making it suitable for user-facing applications where many users simultaneously access analytical data.

Key Features and Real-Time Capabilities

Native streaming ingestion supports multiple data sources without complex ETL infrastructure. Kafka integration enables continuous processing of message streams, while HTTP and TCP interfaces support real-time JSON inserts for web applications and high-performance binary protocols for maximum throughput.

ClickHouse provides several key capabilities:

These features enable continuous data processing without traditional batch limitations.

Materialized views enable real-time transformations:

CREATE MATERIALIZED VIEW daily_user_stats TO user_summary AS

SELECT

toDate(event_time) as date,

user_id,

COUNT(*) as event_count,

SUM(revenue) as total_revenue

FROM user_events

GROUP BY date, user_id;SQL Compatibility and Extensions

ClickHouse provides comprehensive SQL support based on ANSI standards with analytical extensions. Standard clauses (SELECT, INSERT, CREATE, GROUP BY, ORDER BY), JOIN operations, subqueries, and window functions work as expected, making it accessible to developers familiar with traditional databases.

The key difference lies in the mandatory ENGINE clause when creating tables, which determines how data is stored and accessed:

CREATE TABLE user_events (

user_id UInt64,

event_time DateTime64(3),

event_properties Map(String, String),

revenue Decimal(10,2),

tags Array(String)

) ENGINE = MergeTree()

ORDER BY (user_id, event_time);Advantages and Cost Efficiency

ClickHouse delivers 3-5× cost advantages over managed cloud solutions like Snowflake and BigQuery due to superior compression ratios and resource efficiency. Benchmark studies comparing ClickHouse to Amazon Timestream showed 6,000× higher insert throughput, 175× faster aggregation queries, and 220× lower storage costs.

High-concurrency analytical capabilities enable user-facing applications that would be impractical with traditional analytical databases. Thousands of users can simultaneously execute complex queries without degrading system performance.

Limitations and Operational Complexity

ClickHouse requires operational expertise for production deployments. Multi-node cluster management involves complex configuration of distributed consistency, replication, and query coordination. Resource management and query optimization require knowledge beyond typical database administration.

The system lacks ACID transaction support and struggles with concurrent write operations. Updates and deletes are expensive operations requiring full table rewrites, making ClickHouse unsuitable for workloads requiring frequent data modifications.

Complex multi-table JOINs perform poorly due to ClickHouse's rule-based query planner, limiting its effectiveness for workloads requiring extensive data normalization or complex relational operations.

Production issues include distributed consistency challenges where multi-node deployments don't guarantee read-after-write consistency, potentially causing temporary data discrepancies. Memory allocation and query optimization failures can impact system stability without monitoring and tuning.

Enterprise Use Cases and Applications

Real-time user-facing analytics dashboards represent ClickHouse's primary strength. Companies like Cloudflare use ClickHouse to power analytics platforms serving millions of users with sub-second response times on massive datasets.

Clickstream analytics benefits from ClickHouse's ability to ingest high-velocity event streams while supporting complex analytical queries. Web analytics platforms, application performance monitoring systems, and IoT time-series analysis leverage ClickHouse's throughput capabilities.

High-concurrency analytical workloads that require serving thousands of users simultaneously with consistent performance represent scenarios where ClickHouse's architecture provides clear advantages over alternative solutions.

| Feature | TimescaleDB | DuckDB | ClickHouse |

|---|---|---|---|

| Architecture | PostgreSQL extension | Embedded in-process | Distributed columnar |

| Scaling Pattern | Vertical + read replicas | Single-node only | Horizontal multi-node |

| OLAP Performance | Excellent (time-series) | Very Good (single-node) | Excellent (distributed) |

| OLTP Support | Full (PostgreSQL) | Limited transactions | None |

| Deployment Model | Client-server | Embedded library | Client-server cluster |

| Operational Complexity | Medium (PostgreSQL) | Low (zero-config) | High (distributed systems) |

| SQL Compatibility | Full PostgreSQL | Full ANSI SQL | Extended analytical SQL |

| Typical Dataset Size | TB scale | GB-TB scale | TB-PB scale |

| Write Concurrency | High (ACID) | Single writer | Limited (eventual consistency) |

| Setup Time | Hours (PostgreSQL) | Minutes (embedded) | Days-Weeks (cluster) |

| Memory Requirements | Moderate | Careful management needed | High (distributed) |

| Cost Efficiency | Medium | High (no infrastructure) | High (at scale) |

| Learning Curve | Low (PostgreSQL knowledge) | Low (standard SQL) | High (knowledge required) |

Choosing between these analytical databases requires evaluating your requirements against each system's architectural strengths and limitations.

Choose TimescaleDB if:

Your team already operates PostgreSQL infrastructure and needs time-series analytics with full SQL capabilities. This choice makes sense when ACID compliance matters, you need to mix relational and time-series workloads, and your dataset size ranges in the terabyte scale.

The operational familiarity advantage cannot be overstated. Teams with PostgreSQL expertise face no learning curve and can leverage existing monitoring, backup, and high-availability infrastructure. Time-series functions like time_bucket, gap detection, and interpolation provide immediate value for IoT sensor networks, financial trading systems, and manufacturing analytics.

TimescaleDB works well when you need continuous aggregates for real-time dashboards while maintaining the ability to execute complex analytical queries across historical data. The hybrid storage system provides 90% compression on old data while maintaining transaction performance on recent data.

Dataset size considerations favor TimescaleDB for single-node workloads up to several terabytes. Beyond this scale, the single-node write limitation becomes constraining, and distributed alternatives become more attractive.

Choose DuckDB if:

You need embedded analytics within applications without external database dependencies, or you're building ETL pipelines that benefit from direct file querying capabilities. DuckDB excels when you want to avoid operational overhead and can work within single-node performance constraints.

The zero-configuration deployment makes DuckDB ideal for SaaS applications providing analytical features to customers, dashboard APIs serving real-time insights, and data transformation workflows that replace complex parsing code with SQL queries.

Direct cloud storage querying transforms data processing workflows. Instead of building ETL infrastructure to move data between systems, applications can query CSV, Parquet, and JSON files directly from S3, GCS, or Azure Blob Storage.

Single-node performance suffices for datasets up to hundreds of gigabytes with query response times measured in seconds. The embedded architecture prevents horizontal scaling but eliminates infrastructure complexity.

Memory management requires careful attention in production environments. Applications must implement monitoring for out-of-memory conditions and automatic restart capabilities to maintain service reliability.

Choose ClickHouse if:

You need sub-second queries on multi-terabyte datasets, real-time user-facing analytics with high concurrency requirements, or cost efficiency at petabyte scale with teams that have distributed systems expertise.

ClickHouse makes sense for applications serving thousands of concurrent users with analytical queries, where traditional databases would struggle under the load. Real-time streaming ingestion capabilities enable continuous processing of high-velocity event streams without batch processing delays.

The cost efficiency becomes compelling at scale. Independent analysis shows ClickHouse delivering 3-5× cost advantages over managed cloud solutions while providing superior performance characteristics.

Operational complexity requires dedicated expertise. Teams need understanding of distributed systems, cluster management, query optimization, and monitoring practices. This investment pays off for applications with massive analytical requirements but may not justify the complexity for smaller workloads.

Dataset sizes in the terabyte to petabyte range represent ClickHouse's sweet spot. The distributed architecture scales horizontally across multiple nodes while maintaining query performance through intelligent data distribution and parallel processing.

The decision framework isn't about finding the "best" database but rather matching system capabilities with your needs. Teams with PostgreSQL expertise benefit from TimescaleDB's time-series optimizations. Applications requiring embedded analytics without infrastructure complexity choose DuckDB. Organizations processing massive datasets with distributed systems expertise select ClickHouse.

Sergey Kaplich