OpenClaw: AI Agent That Ships Code While You Sleep (2026)

Bradley Herman

You're debugging a gnarly race condition in your React app. State updates are firing out of order, the useEffect cleanup isn't cleaning up, and somewhere between the API call and the render, data is disappearing into the void.

You paste the component into your AI coding assistant. You describe the bug. You hit enter.

The response? A generic suggestion to "add a loading state" and some boilerplate that completely misses the actual problem.

Sound familiar?

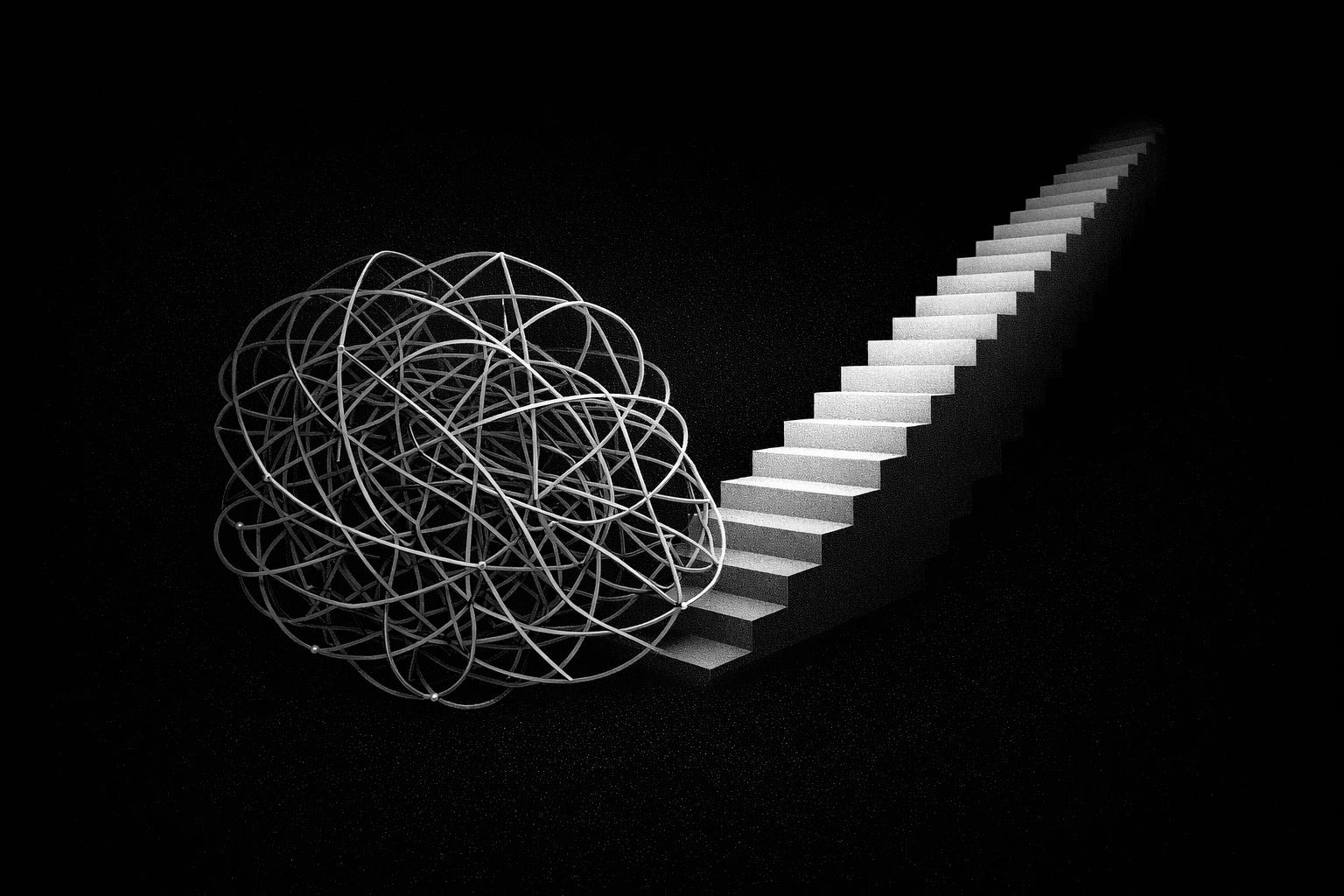

Standard prompting works fine for simple tasks—generate a button component, write a utility function, convert this to TypeScript. But the moment you need the AI to reason through something complex—debug a multi-step data flow, architect a state management solution, figure out why your component re-renders seventeen times—it falls apart.

This is where Chain-of-Thought prompting changes the game.

Chain-of-Thought (CoT) prompting makes AI models show their work. Instead of jumping straight from question to answer, you guide the model to generate intermediate reasoning steps—breaking complex problems into smaller pieces before arriving at a solution.

Google Research introduced this formally in January 2022, and the results were striking. On the GSM8K benchmark of math word problems, the 540-billion-parameter PaLM model's accuracy jumped from 17.9% to 56.9%, a relative improvement of about 218% ((56.9 − 17.9) / 17.9 ≈ 2.18).

Here's the thing: you already think this way.

When you're debugging that race condition, you don't just stare at the code and wait for enlightenment. You trace the data flow. You check when the effect runs. You verify what state exists at each point. You reason through it step by step.

Zero-shot CoT—simply adding "Let's think step by step" to your prompt—activates reasoning patterns the model already has. The model knows how to reason; it learned these patterns during pre-training. The prompt just gives it permission to show that reasoning rather than jumping straight to an answer. For frontend work, though, you'll often want more structure.
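In code, the zero-shot version is just a suffix, while the structured version turns the reasoning questions into an explicit numbered list. Below is a minimal TypeScript sketch. The helper names `withZeroShotCoT` and `withReasoningSteps` are hypothetical and not part of any library:

```typescript
// Trigger phrase from zero-shot CoT: append it and let the model reason.
const ZERO_SHOT_TRIGGER = "Let's think step by step.";

function withZeroShotCoT(prompt: string): string {
  return `${prompt.trim()}\n\n${ZERO_SHOT_TRIGGER}`;
}

// Structured CoT: spell out the questions the model must answer
// before it writes any code, then state the final deliverable.
function withReasoningSteps(task: string, steps: string[], deliverable: string): string {
  const numbered = steps.map((step, i) => `${i + 1}) ${step}`).join("\n");
  return `${task.trim()}\n\nThink through this step-by-step:\n${numbered}\n\n${deliverable}`;
}

const fetchPrompt = withReasoningSteps(
  "Create a React component that fetches user data from an API.",
  [
    "What states do we need?",
    "When should we fetch data?",
    "How do we handle errors?",
    "What should we show during loading?",
    "How do we handle cleanup if the component unmounts?",
  ],
  "Then write the component with TypeScript and proper error handling.",
);
console.log(fetchPrompt);
```

The structured helper rebuilds the fetch-component CoT prompt used later in this article. Keeping the steps as data makes them easy to reuse and tweak per task.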

Standard prompting treats every request the same way: input goes in, output comes out, and whatever happens in between is a black box.

For simple tasks, this works. "Create a React component that displays a user's name" doesn't require deep reasoning. The pattern is clear, the output is predictable.

But ask for something more complex, and you start seeing gaps. The AI isn't stupid. It's just not thinking through the problem.

Standard prompt: "Create a React component that fetches user data from an API and displays it"

Result: A basic component with a useEffect, maybe useState for the data, and... that's it. No loading state, no error handling, no cleanup.

CoT prompt: "Create a React component that fetches user data from an API. Think through this step-by-step: 1) What states do we need? 2) When should we fetch data? 3) How do we handle errors? 4) What should we show during loading? 5) How do we handle cleanup if the component unmounts? Then write the component with TypeScript and proper error handling."

Result: A component with loading, error, and success states. Proper TypeScript types. Conditional rendering for each state. An AbortController for cleanup.

Same task. Dramatically different output quality.
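The structure that the CoT prompt draws out can be sketched without React at all. Below is a minimal, framework-agnostic TypeScript version of the fetch logic such a component might contain. It assumes a hypothetical `User` shape and takes a `FetchLike` parameter (a type defined just for this sketch, so tests can inject a fake) instead of calling the global `fetch` directly:

```typescript
// Hypothetical shape of the user record returned by the API.
interface User {
  id: number;
  name: string;
}

// One discriminated union instead of separate loading/error/data flags,
// so impossible combinations (loading AND error) can't be represented.
type FetchState<T> =
  | { status: "loading" }
  | { status: "error"; message: string }
  | { status: "success"; data: T };

// Just enough of fetch's signature to allow injecting a fake.
type FetchLike = (
  url: string,
  init?: { signal?: AbortSignal },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

async function loadUser(
  fetchImpl: FetchLike,
  url: string,
  signal: AbortSignal,
  onState: (state: FetchState<User>) => void,
): Promise<void> {
  onState({ status: "loading" });
  try {
    const res = await fetchImpl(url, { signal });
    if (!res.ok) throw new Error(`HTTP ${res.status}`);
    const data = (await res.json()) as User;
    // Skip the update if the caller aborted while we were waiting.
    if (!signal.aborted) onState({ status: "success", data });
  } catch (err) {
    // An abort means the component unmounted: report nothing.
    if (signal.aborted) return;
    onState({ status: "error", message: err instanceof Error ? err.message : String(err) });
  }
}
```

Inside a component, you would create an `AbortController` in `useEffect`, call `loadUser(fetch, url, controller.signal, setState)`, and return `() => controller.abort()` as the cleanup so an unmounted component never receives a late update.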

Say you need a form validation system:

Create a form validation system for a React signup form.

Walk through the validation logic:

1. What fields need validation? (email, password, confirm password)

2. What are the validation rules for each?

3. When should validation run? (on blur, on submit, on change?)

4. How do we display errors?

5. What about async validation (checking if email exists)?

Then implement with TypeScript and custom hooks.

This produces validation logic that handles synchronous and async rules, proper error messaging, and appropriate timing, not just a basic regex check. Why does this work so much better? Because you've forced the AI to consider timing strategy, error UX, and the async edge case before it writes a single line of code. A simple "build me a form validator" request skips all that reasoning, and you often get code that validates on every keystroke with no debouncing and no server-side email check.
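Here is a minimal TypeScript sketch of the pieces such a prompt tends to surface: synchronous rules, plus a debounced async email check that ignores superseded responses. The specific rules (an 8-character password, a loose email pattern), the 400 ms delay, and the injected `isEmailTaken` function are all assumptions standing in for your real requirements:

```typescript
interface SignupValues {
  email: string;
  password: string;
  confirmPassword: string;
}

type SignupErrors = Partial<Record<keyof SignupValues, string>>;

// Deliberately loose shape check; the server remains the source of truth.
const EMAIL_PATTERN = /^[^\s@]+@[^\s@]+\.[^\s@]+$/;

// Synchronous rules: cheap enough to run on blur and again on submit.
function validateSignup(values: SignupValues): SignupErrors {
  const errors: SignupErrors = {};
  if (!EMAIL_PATTERN.test(values.email)) errors.email = "Enter a valid email address.";
  if (values.password.length < 8) errors.password = "Password must be at least 8 characters.";
  if (values.confirmPassword !== values.password) errors.confirmPassword = "Passwords do not match.";
  return errors;
}

// Delay calls until the user pauses typing.
function debounce<A extends unknown[]>(fn: (...args: A) => void, waitMs: number): (...args: A) => void {
  let timer: ReturnType<typeof setTimeout> | undefined;
  return (...args: A) => {
    if (timer !== undefined) clearTimeout(timer);
    timer = setTimeout(() => fn(...args), waitMs);
  };
}

// Async rule: `isEmailTaken` is a hypothetical server call supplied by the caller.
function createEmailChecker(
  isEmailTaken: (email: string) => Promise<boolean>,
  onResult: (error: string | undefined) => void,
  waitMs = 400,
): (email: string) => void {
  let latest = "";
  return debounce((email: string) => {
    latest = email;
    isEmailTaken(email)
      .then((taken) => {
        // Drop responses superseded by a newer check.
        if (email === latest) onResult(taken ? "That email is already registered." : undefined);
      })
      .catch(() => {
        if (email === latest) onResult("Couldn't check this email right now.");
      });
  }, waitMs);
}
```

Wiring `validateSignup` to blur and submit events, and the checker to the email field's change handler, gives you the timing strategy the CoT prompt asks the model to reason about.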

Instead of requesting direct fixes ("fix this code"), structure prompts to walk the model through diagnostic reasoning:

This React component has state that isn't updating in the UI.

Debug step-by-step:

1. Provide complete context: Component code, error messages, and expected behavior

2. Explain the state update mechanism before proposing solutions

3. Reason through common state pitfalls like stale closures or direct mutations

4. Verify by walking through the updated code's execution path

[paste your component]

Explicit reasoning steps let you verify the AI's logic and identify where it goes wrong. This is where CoT really shines for debugging—you can watch the AI trace through the problem and catch the exact moment its reasoning breaks down. When it walks through stale closure possibilities and incorrectly dismisses them, you see it happen. That visibility turns a frustrating "why did it suggest that?" into an actionable "ah, it missed that the callback captures the old state value."
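The stale-closure failure mentioned above is easy to reproduce outside React. This sketch uses a hypothetical `createState` helper as a stand-in for `useState`. A handler that captures the value once keeps computing from that snapshot, while the updater form reads the latest value at call time:

```typescript
// Accepts either a new value or an updater function, like React's setState.
type Updater<T> = T | ((prev: T) => T);

interface StateCell<T> {
  get(): T;
  set(next: Updater<T>): void;
}

// A tiny framework-free stand-in for a useState cell.
function createState<T>(initial: T): StateCell<T> {
  let current = initial;
  return {
    get: () => current,
    set: (next) => {
      current = typeof next === "function" ? (next as (prev: T) => T)(current) : (next as T);
    },
  };
}

// Bug: `count` is read once when the handler is created, so every call
// computes from the same stale snapshot (the "stale closure" pitfall).
function makeStaleIncrement(state: StateCell<number>): () => void {
  const count = state.get();
  return () => state.set(count + 1);
}

// Fix: the updater form reads the latest value at call time.
function makeFreshIncrement(state: StateCell<number>): () => void {
  return () => state.set((prev) => prev + 1);
}

const staleCounter = createState(0);
const staleIncrement = makeStaleIncrement(staleCounter);
staleIncrement();
staleIncrement();
staleIncrement();

const freshCounter = createState(0);
const freshIncrement = makeFreshIncrement(freshCounter);
freshIncrement();
freshIncrement();
freshIncrement();

console.log(staleCounter.get(), freshCounter.get()); // 1 3
```

This is the reasoning step you want to see in the model's output. It should notice that the handler closes over `count` and suggest the functional update (or a correct dependency array) rather than adding more state.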

For multi-step user flows or intricate state management:

Build a multi-step checkout wizard in React.

Plan the architecture:

1. What's the state structure? (current step, form data, validation status)

2. How do we handle navigation between steps?

3. What happens if a user goes back and changes earlier data?

4. How do we persist partial progress?

5. What's the error recovery strategy?

Then implement with proper component composition.

This produces architecturally sound code with clear separation of concerns—state logic that actually makes sense rather than spaghetti spread across components. Without these explicit planning steps, AI tends to dump everything into one component with useState calls scattered everywhere and no clear data flow. The structured prompt prevents that common failure mode.
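As an illustration of the state structure such a plan might settle on, here is a minimal reducer sketch. The three step names, the `validated` list, and the rule that editing a step invalidates it and every later step are assumptions chosen to answer the five planning questions, not a prescribed design:

```typescript
// Hypothetical step names for a three-step checkout.
type Step = "shipping" | "payment" | "review";
const STEPS: Step[] = ["shipping", "payment", "review"];

interface WizardState {
  stepIndex: number;
  data: Partial<Record<Step, Record<string, string>>>;
  validated: Step[]; // steps that passed validation
}

type WizardAction =
  | { type: "update"; step: Step; values: Record<string, string> }
  | { type: "markValid"; step: Step }
  | { type: "next" }
  | { type: "back" };

const initialWizardState: WizardState = { stepIndex: 0, data: {}, validated: [] };

// Pure reducer: usable with React's useReducer or tested on its own.
function wizardReducer(state: WizardState, action: WizardAction): WizardState {
  switch (action.type) {
    case "update": {
      const changedIndex = STEPS.indexOf(action.step);
      const data = { ...state.data };
      data[action.step] = { ...state.data[action.step], ...action.values };
      // Changing earlier data invalidates this step and every step after it.
      const validated = state.validated.filter((s) => STEPS.indexOf(s) < changedIndex);
      return { ...state, data, validated };
    }
    case "markValid":
      return state.validated.includes(action.step)
        ? state
        : { ...state, validated: [...state.validated, action.step] };
    case "next": {
      // Only advance past a validated step, and never past the last one.
      const current = STEPS[state.stepIndex];
      if (!state.validated.includes(current) || state.stepIndex === STEPS.length - 1) return state;
      return { ...state, stepIndex: state.stepIndex + 1 };
    }
    case "back":
      return { ...state, stepIndex: Math.max(0, state.stepIndex - 1) };
    default:
      return state;
  }
}

// Persist partial progress as JSON (e.g. in sessionStorage).
function serializeWizard(state: WizardState): string {
  return JSON.stringify(state);
}

function restoreWizard(raw: string | null): WizardState {
  if (!raw) return initialWizardState;
  try {
    return JSON.parse(raw) as WizardState;
  } catch {
    return initialWizardState; // corrupted storage: start over rather than crash
  }
}
```

Because the reducer is pure, it can back `useReducer` directly, and the two persistence helpers keep storage concerns out of the step components.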

CoT isn't free. It generates 2-5x more tokens than standard prompting and typically costs 3-5x more per request. The question isn't whether it's better—it's whether it's worth it for your specific task.

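Use CoT when: the task needs three or more logical steps, such as debugging a multi-step data flow, designing a state management architecture, or tracing why a component re-renders.

Skip CoT when: the task is simple generation or conversion (a button component, a utility function, a TypeScript conversion), an interactive feature needs sub-second responses, or you're running a smaller model that shows little benefit.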

CoT provides minimal benefit on simple tasks. Save it for problems that actually require multi-step reasoning.

Let's be direct about the tradeoffs.

Token costs increase 3-5x. CoT prompts generate longer outputs because they include all those reasoning steps. More tokens means more money.

Latency increases significantly. For interactive features requiring sub-second responses, this can be a dealbreaker. Chain-of-Draft prompting, which constrains each reasoning step to just a few words, offers a way out: AWS reports it can cut latency by 78% while maintaining accuracy.
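Chain-of-Draft is a prompt-level change, so trying it costs one instruction string. The sketch below uses an instruction modeled on the one published with the Chain-of-Draft paper (treat the exact wording, the five-word limit, and the `####` answer separator as assumptions to tune) and extracts the final answer from the terse drafts:

```typescript
// Instruction modeled on the one published with the Chain-of-Draft paper;
// the wording, five-word limit, and "####" separator are assumptions to tune.
const CHAIN_OF_DRAFT_INSTRUCTION =
  "Think step by step, but only keep a minimum draft for each thinking step, " +
  "with 5 words at most. Return the answer at the end of the response after a separator ####.";

function withChainOfDraft(task: string): string {
  return `${task.trim()}\n\n${CHAIN_OF_DRAFT_INSTRUCTION}`;
}

// Return the text after the last separator, or null if the model omitted it.
function extractDraftAnswer(response: string, separator = "####"): string | null {
  const index = response.lastIndexOf(separator);
  return index === -1 ? null : response.slice(index + separator.length).trim();
}

const draftPrompt = withChainOfDraft("Why does this useEffect run twice in development?");
const answer = extractDraftAnswer(
  "StrictMode remounts component.\nEffect runs again.\n#### Add a cleanup function to the effect.",
);
console.log(answer); // Add a cleanup function to the effect.
```

Parsing the answer after a fixed separator also keeps the draft steps out of your UI when you only need the conclusion.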

CoT doesn't eliminate hallucinations. CoT may reduce how often a model hallucinates, but it can make the errors that remain harder to spot. An elaborate reasoning chain makes a wrong answer look more plausible, and the confident tone of "First, we do X because Y" can make you nod along even when a step is fundamentally flawed. Always verify critical logic steps independently; don't trust the output just because it shows its work.

Model size matters. In the original 2022 study, CoT's gains emerged only in models of around 100 billion parameters; smaller models showed minimal improvement.

Cost mitigation exists. Chain-of-Draft achieves a 75% token reduction while maintaining accuracy. Prompt caching can save up to 90% on the input cost of repeated prompt prefixes. Model routing, which sends simple tasks to smaller models and complex ones to larger models, reserves the expensive reasoning for requests that need it.
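Model routing can start as a plain heuristic. In this sketch the tier names, the three-step threshold (echoing the three-or-more-steps rule of thumb used in this article), and the one-second latency cutoff are all hypothetical. It sends simple tasks to a small model without CoT and falls back to Chain-of-Draft when a complex task has a tight latency budget:

```typescript
type ModelTier = "small" | "large";
type ReasoningStyle = "none" | "chain-of-draft" | "chain-of-thought";

interface TaskProfile {
  estimatedSteps: number;  // rough count of logical steps the task needs
  latencyBudgetMs: number; // how long the caller can wait for a response
}

interface Route {
  tier: ModelTier;
  reasoning: ReasoningStyle;
}

// Hypothetical thresholds; tune them against your own benchmarks.
const COMPLEX_TASK_STEPS = 3;
const TIGHT_LATENCY_MS = 1000;

function routeTask(task: TaskProfile): Route {
  // Simple generation or conversion: small model, no reasoning overhead.
  if (task.estimatedSteps < COMPLEX_TASK_STEPS) return { tier: "small", reasoning: "none" };
  // Complex but latency-sensitive: keep reasoning, but in terse drafts.
  if (task.latencyBudgetMs < TIGHT_LATENCY_MS) return { tier: "large", reasoning: "chain-of-draft" };
  // Complex with time to spare: full chain-of-thought.
  return { tier: "large", reasoning: "chain-of-thought" };
}
```

Estimating `estimatedSteps` is the hard part in practice; a keyword check or a cheap classifier call are common starting points.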

You don't need new tools to start using CoT. You need different prompts.

Start with zero-shot CoT for complex reasoning. Add "Let's think step by step" or "Walk through this systematically" to your existing prompts. This works best for tasks requiring three or more logical steps.

Create reusable templates for recurring patterns. For debugging, code review, and architecture decisions, store CoT templates in your tools: Continue's markdown-based prompt templates, Cursor's .cursorrules files, or LangChain.js chains for programmatic workflows. Drop your CoT debugging template into .cursorrules and every time you invoke Cursor's chat with a bug, it automatically includes your step-by-step diagnostic framework.
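Whichever tool stores them, a template is just text with slots. Here is a minimal sketch. It assumes a `{{name}}` placeholder syntax invented for this example (not Continue's or Cursor's format) and a debugging template adapted from the step-by-step prompt earlier in this article:

```typescript
// Debugging template adapted from the step-by-step prompt above;
// {{name}} slots are this sketch's own convention.
const DEBUG_TEMPLATE = [
  "This {{framework}} component has a bug: {{symptom}}",
  "",
  "Debug step-by-step:",
  "1. Explain the state update mechanism before proposing solutions.",
  "2. Reason through common pitfalls like stale closures or direct mutations.",
  "3. Verify the fix by walking through the updated code's execution path.",
  "",
  "{{code}}",
].join("\n");

// Fill every {{name}} slot, failing loudly if a value is missing.
function renderTemplate(template: string, vars: Record<string, string>): string {
  return template.replace(/\{\{(\w+)\}\}/g, (_match: string, name: string) => {
    if (!Object.prototype.hasOwnProperty.call(vars, name)) {
      throw new Error(`Missing template variable: ${name}`);
    }
    // Returned from a function, so "$" in pasted code is not treated as a pattern.
    return vars[name];
  });
}

const debugPrompt = renderTemplate(DEBUG_TEMPLATE, {
  framework: "React",
  symptom: "state isn't updating in the UI",
  code: "const [count, setCount] = useState(0);",
});
console.log(debugPrompt);
```

Failing on a missing variable is a deliberate choice: a prompt that silently ships with an empty `{{code}}` slot wastes a full CoT request.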

Use your existing tools. GitHub Copilot, Cursor, and Continue all support CoT prompting through their chat interfaces and configuration systems. For programmatic implementations, LangChain.js and LlamaIndex.ts both have TypeScript support and npm packages ready to install.

Monitor and iterate. Track whether your CoT prompts justify the added cost. CoT delivers 2-3x accuracy improvements on complex reasoning but introduces 3-5x cost increases—benchmark regularly to ensure the tradeoff makes sense for your use case.
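One way to benchmark the tradeoff is to compare cost per correct answer rather than cost per request. Here is a minimal sketch. It assumes failed attempts are simply retried, so the expected number of attempts is 1 / accuracy, and that you measure cost and accuracy yourself. The example figures are illustrative, not benchmarks:

```typescript
interface PromptVariant {
  costPerRequest: number; // measured from your provider's billing, any currency unit
  accuracy: number;       // fraction of requests you judged correct, between 0 and 1
}

// With independent retries, you need 1 / accuracy attempts on average per correct answer.
function costPerCorrectAnswer(v: PromptVariant): number {
  if (v.accuracy <= 0) return Infinity;
  return v.costPerRequest / v.accuracy;
}

// Below 1: CoT is cheaper per correct answer despite the larger bill per request.
function cotCostRatio(baseline: PromptVariant, cot: PromptVariant): number {
  return costPerCorrectAnswer(cot) / costPerCorrectAnswer(baseline);
}

// Illustrative numbers: CoT costs 4x per request and lifts accuracy 2.5x.
const ratio = cotCostRatio(
  { costPerRequest: 1, accuracy: 0.2 },
  { costPerRequest: 4, accuracy: 0.5 },
);
console.log(ratio.toFixed(2)); // 1.60
```

In this example CoT still costs 1.6x as much per correct answer, so it pays off only if the accuracy gain matters more than the spend. A larger accuracy lift, or a cheaper reasoning variant such as Chain-of-Draft, can push the ratio below 1.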

The pattern across successful implementations is consistent: detailed context, explicit reasoning steps, and clear output expectations. Strapi's engineering team reports near-zero iterative revision loops when using well-structured CoT prompts. What does that mean in practice? The AI catches potential bugs during the reasoning phase before writing code, so engineers stopped playing ping-pong with "fix this" → "still broken" → "fix that too" cycles.

Chain-of-Thought prompting isn't magic. It's a technique that makes AI assistants reason through problems the way you already do—step by step, considering edge cases, thinking before acting.

For simple tasks, it's overkill. For complex debugging, architecture decisions, and intricate multi-step logic, it delivers major improvements (a 218% relative accuracy gain on a math-reasoning benchmark in Google's original study) while introducing 3-5x cost increases.

The models are already capable of this reasoning. The prompts just need to ask for it.

Sergey Kaplich