OpenClaw: AI Agent That Ships Code While You Sleep (2026)

Bradley Herman

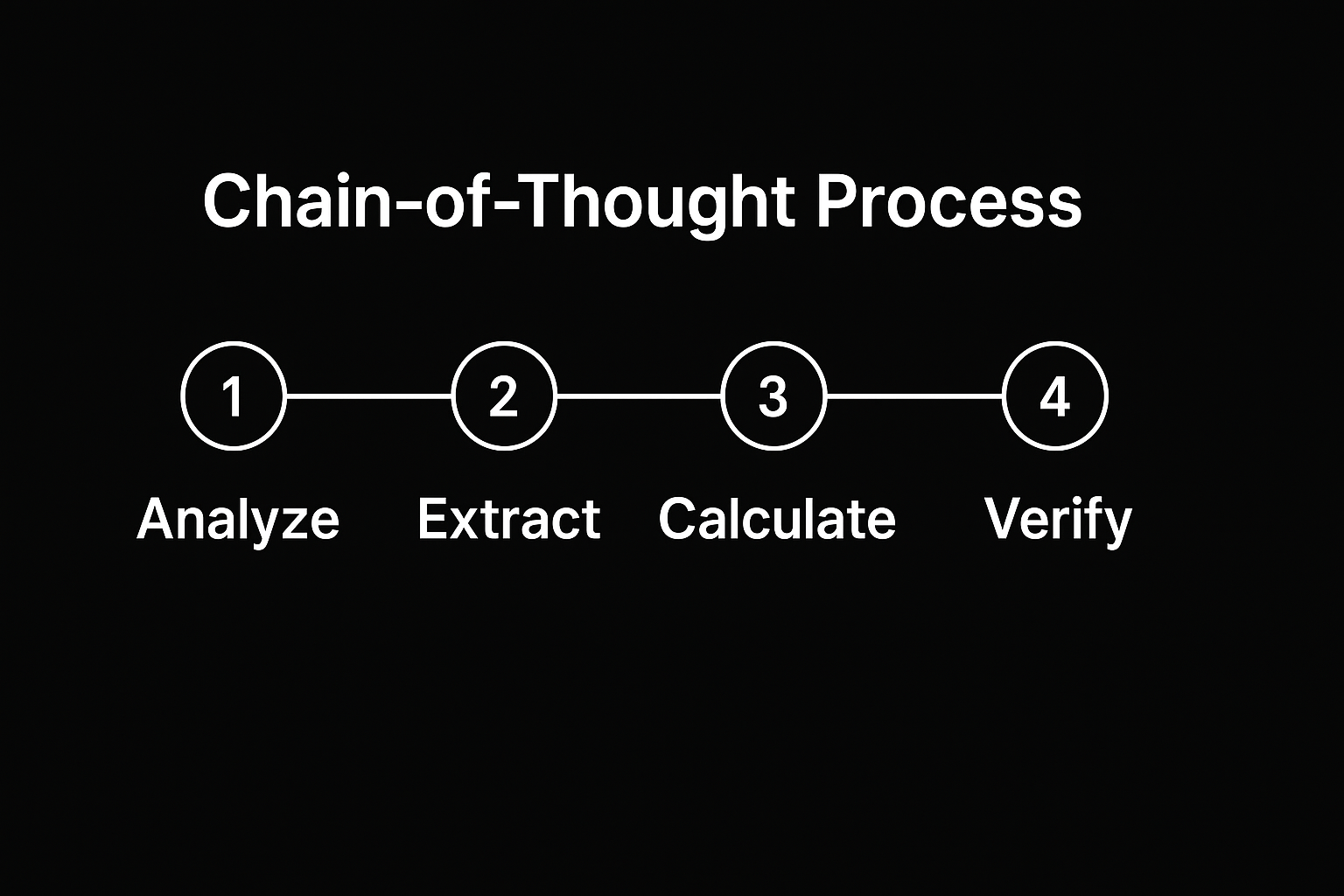

Chain-of-Thought prompting guides AI models to break down complex problems into sequential reasoning steps, showing their work like a student solving a math problem. This technique can improve accuracy by up to 28% on complex tasks. It requires careful implementation, though, especially with newer reasoning models. These models handle step-by-step reasoning internally rather than through explicit prompt instructions.

Chain-of-Thought prompting makes AI models think out loud. Instead of jumping straight to an answer, you ask the model to work through a problem step-by-step, revealing its reasoning process along the way.

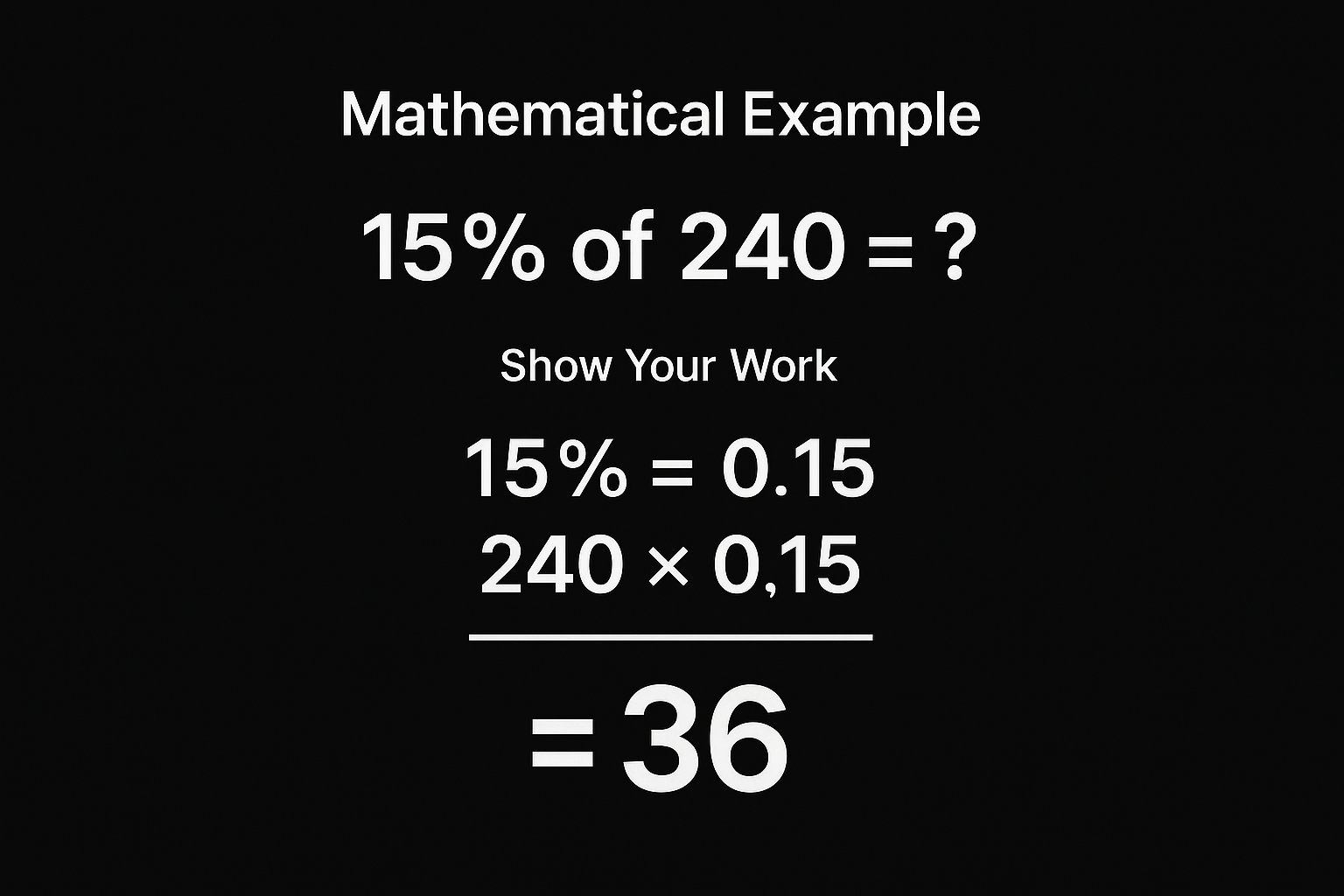

Think of it like the difference between asking someone "What's 15% of 240?" and asking "What's 15% of 240? Show your work." The second approach gives you both the answer (36) and the steps: convert 15% to 0.15, then multiply 240 × 0.15 = 36. This transparency helps you catch errors and builds confidence in the result.
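As a minimal sketch, CoT prompting only changes the prompt text itself. The helper below appends a show-your-work instruction to a question. `withChainOfThought` is a hypothetical name, not part of any SDK. The second function, `percentOf` (also hypothetical), lists the steps a correct answer to the 15% example should contain, so you can compare them against the model's output.

```typescript
// Hypothetical helper: append a "show your work" instruction to any question.
function withChainOfThought(question: string): string {
  return `${question} Show your work: list each step before giving the final answer.`;
}

// Reference steps for "What's P% of B?", used to check a model's reasoning.
function percentOf(percent: number, base: number): { steps: string[]; answer: number } {
  const rate = percent / 100; // e.g. 15% -> 0.15
  const answer = base * rate; // e.g. 240 × 0.15
  return {
    steps: [`Convert ${percent}% to ${rate}`, `Multiply ${base} × ${rate} = ${answer}`],
    answer,
  };
}

const cotPrompt = withChainOfThought("What's 15% of 240?");
const reference = percentOf(15, 240);
console.log(cotPrompt);
console.log(reference.steps.join("\n"));
```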

CoT prompting addresses three critical challenges in production AI systems:

Accuracy improvements: Research shows up to 28.2% better performance on complex reasoning tasks compared to standard prompting. For applications involving mathematical calculations, data analysis, or multi-step decision-making, this improvement can be the difference between a useful tool and an unreliable one.

Better debugging: When an AI gives you a wrong answer, CoT shows you exactly where the reasoning went off track. Instead of a mysterious black box, you get a traceable thought process you can analyze and fix.

User trust: In high-stakes applications like financial analysis or healthcare decision support, users need to understand how the AI reached its conclusions. CoT provides this transparency automatically.
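To make the debugging benefit concrete, here is a minimal sketch that checks a model's intermediate results against a deterministic reference and reports the first step that went wrong. It uses the compound interest problem from the prompt examples that follow ($1000 at 5% annually for 3 years). It assumes you have already parsed the model's year-by-year balances into numbers. `compoundBalances` and `firstDivergentStep` are hypothetical helpers.

```typescript
// Reference computation: balance after year n = principal × (1 + rate)^n,
// rounded to cents so it can be compared with the model's stated figures.
function compoundBalances(principal: number, rate: number, years: number): number[] {
  const balances: number[] = [];
  let balance = principal;
  for (let year = 1; year <= years; year++) {
    balance *= 1 + rate;
    balances.push(Math.round(balance * 100) / 100);
  }
  return balances;
}

// Index of the first step where the model disagrees with the reference
// (or stops early), or -1 if every step matches within the tolerance.
function firstDivergentStep(modelSteps: number[], expectedSteps: number[], tolerance = 0.01): number {
  for (let i = 0; i < expectedSteps.length; i++) {
    if (i >= modelSteps.length || Math.abs(modelSteps[i] - expectedSteps[i]) > tolerance) {
      return i;
    }
  }
  return -1;
}

const expected = compoundBalances(1000, 0.05, 3); // year-end balances for years 1-3
const interest = expected[expected.length - 1] - 1000;

// Hypothetical model output that applied simple instead of compound interest from year 2 on.
const modelBalances = [1050, 1100, 1150];
const badStep = firstDivergentStep(modelBalances, expected);
console.log(`Compound interest: $${interest.toFixed(2)}`);
console.log(badStep === -1 ? "All steps match" : `Reasoning diverges at year ${badStep + 1}`);
```

Comparing numbers step by step, rather than only the final answer, is what lets you say *where* the chain broke. Here the error shows up in year 2, when the model stopped compounding.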

```typescript
// Non-reasoning models need explicit step-by-step instructions in the prompt.
// `aiModel` is a placeholder for your model client; `generate` is not a specific SDK's API.
const response = await aiModel.generate({
  prompt: `
Solve this step by step:

Problem: What is the compound interest on $1000 at 5% annually for 3 years?

Think through this step-by-step:
1. Identify the compound interest formula
2. Substitute the given values
3. Calculate each year's growth
4. Show the final result
`,
});
```

Reasoning models with internal CoT handling work differently:

```typescript
// Reasoning models handle CoT internally: don't add explicit step-by-step instructions.
// `reasoningModel` is likewise a placeholder for a client configured with a reasoning model.
const response = await reasoningModel.generate({
  prompt: "What is the compound interest on $1000 at 5% annually for 3 years?",
});
```

CoT prompting isn't free. Understanding the cost implications helps you deploy reasoning workflows strategically.
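One rough way to weigh those costs is to compare cost per correct answer on an evaluation set, rather than raw token counts. This is a minimal sketch under assumptions. `EvalRun`, `Pricing`, and `costPerCorrect` are hypothetical names. Prices are assumed to be quoted per million tokens. The numbers in the usage are made-up inputs, not measured results.

```typescript
// Token counts and accuracy from running one evaluation set with one prompting strategy.
interface EvalRun {
  label: string;
  tasks: number; // evaluation tasks attempted
  correct: number; // tasks answered correctly
  inputTokens: number; // total prompt tokens across all tasks
  outputTokens: number; // total output tokens, including any visible reasoning
}

// Assumed pricing model: dollars per million tokens (fill in from your provider).
interface Pricing {
  inputPerMillion: number;
  outputPerMillion: number;
}

function totalCost(run: EvalRun, pricing: Pricing): number {
  return (
    (run.inputTokens / 1e6) * pricing.inputPerMillion +
    (run.outputTokens / 1e6) * pricing.outputPerMillion
  );
}

// Dollars spent per correct answer; Infinity if nothing was answered correctly.
function costPerCorrect(run: EvalRun, pricing: Pricing): number {
  return run.correct === 0 ? Infinity : totalCost(run, pricing) / run.correct;
}

// Made-up inputs for illustration only.
const examplePricing: Pricing = { inputPerMillion: 1, outputPerMillion: 4 };
const direct: EvalRun = { label: "direct", tasks: 100, correct: 60, inputTokens: 20000, outputTokens: 5000 };
const withCot: EvalRun = { label: "CoT", tasks: 100, correct: 80, inputTokens: 25000, outputTokens: 60000 };

for (const run of [direct, withCot]) {
  const accuracy = (run.correct / run.tasks) * 100;
  const perCorrect = costPerCorrect(run, examplePricing).toFixed(5);
  console.log(`${run.label}: accuracy ${accuracy.toFixed(0)}%, $${perCorrect} per correct answer`);
}
```

With these made-up inputs, the CoT run is more accurate but costs more per correct answer. Whether that trade is worth it depends on what a wrong answer costs in your application.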

For production applications, many teams use a hybrid approach. You can deploy reasoning models for complex planning tasks and standard models for simple execution, achieving 50-70% cost savings with minimal accuracy loss.
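The hybrid approach can be sketched as a small router. This is an illustration under assumptions, not a production design. `routeTask`, the `Task` shape, and the `planning`/`execution` split are all hypothetical. The returned `model` field names a tier rather than a real model. The router follows the prompting rules above: reasoning models get the plain question, and standard models get explicit step-by-step instructions only for multi-step tasks.

```typescript
// Hypothetical task shape: what kind of work it is and whether it needs several reasoning steps.
type TaskKind = "planning" | "execution";

interface Task {
  kind: TaskKind;
  question: string;
  multiStep: boolean;
}

interface RoutedRequest {
  model: "reasoning" | "standard"; // placeholder tiers, not real model names
  prompt: string;
}

const COT_SUFFIX = "\n\nThink through this step-by-step and show each step before the final answer.";

function routeTask(task: Task): RoutedRequest {
  if (task.kind === "planning") {
    // Reasoning models handle CoT internally, so send the question as-is.
    return { model: "reasoning", prompt: task.question };
  }
  // Standard models only get explicit CoT instructions for multi-step work;
  // simple execution (e.g. a lookup) skips CoT to save tokens and latency.
  const prompt = task.multiStep ? task.question + COT_SUFFIX : task.question;
  return { model: "standard", prompt };
}

const plan = routeTask({ kind: "planning", question: "Plan the rollout of a new billing service.", multiStep: true });
const lookup = routeTask({ kind: "execution", question: "What is the customer's account ID?", multiStep: false });
const calc = routeTask({
  kind: "execution",
  question: "What is the compound interest on $1000 at 5% annually for 3 years?",
  multiStep: true,
});
console.log(plan, lookup, calc);
```

In practice the `multiStep` flag might come from a classifier or simple heuristics. Hard-coding it here keeps the routing logic visible.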

Chain-of-thought shines in specific scenarios but can actually hurt performance in others. Here's when to use CoT for maximum impact:

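Use CoT for: mathematical calculations, data analysis, and multi-step decision-making, especially with non-reasoning models that need explicit step-by-step prompting.

Skip CoT for: simple data lookups and other simple tasks, smaller models (where CoT can hurt performance), and reasoning models that already handle CoT internally.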

Real-world example: A financial analysis tool saw 40% better accuracy using CoT for investment risk calculations, but using CoT for simple data lookups slowed responses by 200% with no accuracy gain.

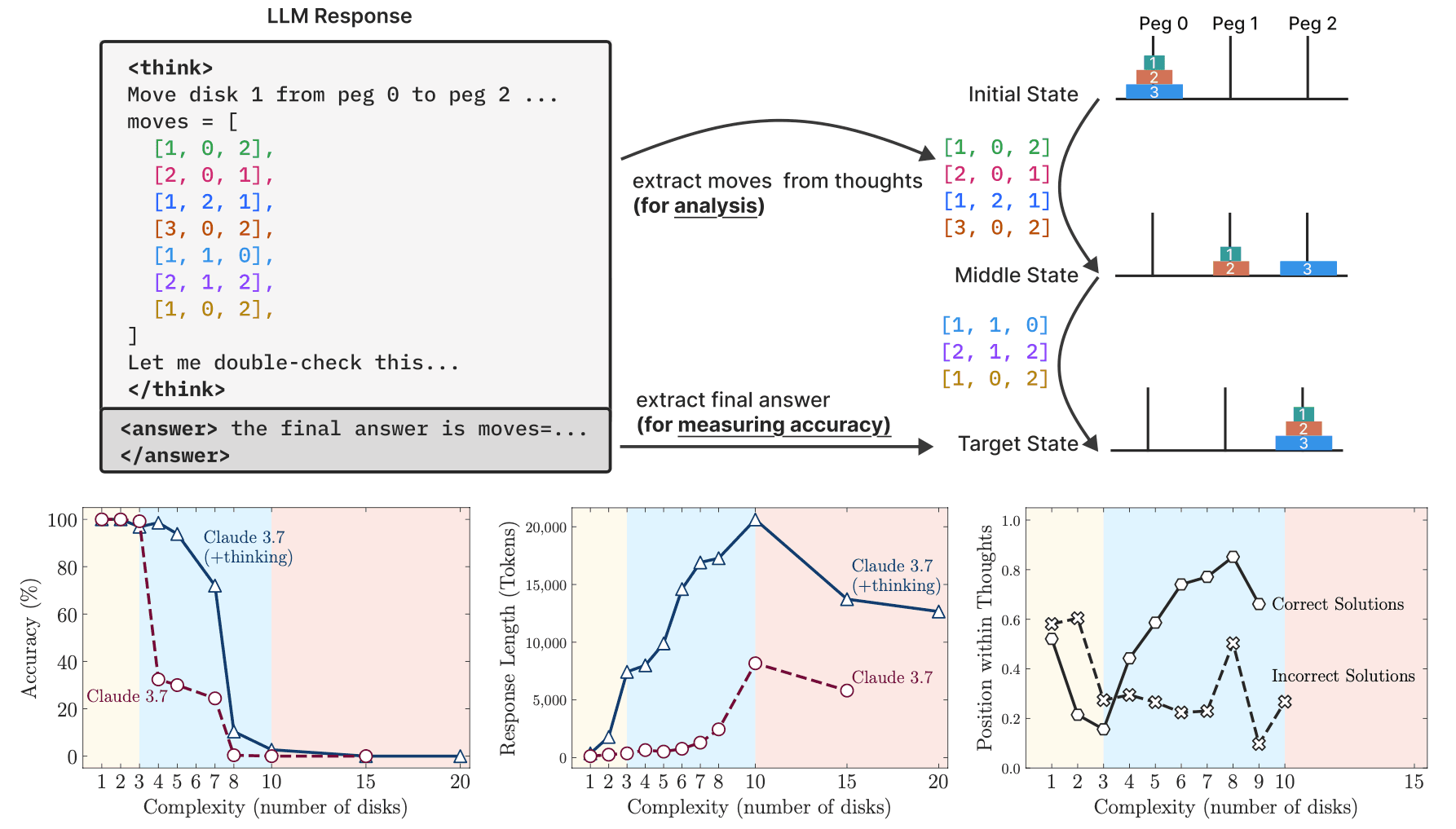

"CoT always improves AI performance": This isn't true. CoT can actually hurt performance on simple tasks or with smaller models. Research from Apple Machine Learning shows that reasoning models can experience "complete accuracy collapse beyond certain complexities."

"The reasoning steps show how the AI actually thinks": The visible reasoning chain might not reflect the model's actual internal processing. Research from Anthropic explicitly states that "reasoning models don't always accurately reflect their thought processes."

"CoT is cost-neutral": Far from it. CoT significantly increases token usage and computational requirements. According to IBM's analysis, generating reasoning chains requires more computational resources than standard prompting. Budget accordingly and measure whether the accuracy improvements justify the additional costs.

Sergey Kaplich